2024 Industry Lessons

Layoff

2024 started off as a rough year for me: in January I was laid off from my job, and 3 days later I lost my mentor. I had a tough time getting back on my feet, and I was not alone. In the past 3 years over half a million people in tech companies were laid off, an average of about 185,000 per year. After going through my experience and coming out on the other side, I wanted to share some of my thoughts.

First things first: getting laid off sucks. It definitely does no wonders for your need for stability and financial security. Mentally, it messes with your sense of competence, when in reality it does not reflect on your skills or worth. If you were laid off, you need to realise that the company can't afford to pay you; it has nothing to do with your worth, no matter how much your brain tries telling you otherwise.

The biggest tech layoffs happened at FAANG companies, where it's already notoriously difficult to get in. The people who were laid off had above-average talent, so the remote job market became fiercely competitive.

Tech companies seem to be adopting a strategy of doing more with less, which means that they are reducing the number of people on payroll and expecting engineers to be able to work in multiple different areas. So it's a pretty bad time to be all in on a single technology or skill, especially if it's a niche. And so, in accordance with Murphy's Law, this is exactly the position I found myself in after getting laid off: despite having some experience in Java, Scala and C, if you looked at my resumé you'd see I had only been paid to write Erlang and Elixir.

The 1 Billion Row Challenge (1brc)

Earlier in the year our industry collectively enjoyed the one billion row challenge. This challenge was deceptively simple, and it grew far beyond the Java bubble where it started. There were people in the Erlang and Elixir space trying their best to optimize already great solutions, which I followed attentively. I had a go at it, and I had my fun "optimizing" and benchmarking. For fun, I decided to ask ChatGPT to give me a Rust program that did the same. After hammering out all of the nonexistent functions and making the compiler happy, I was shocked to see that the simple, single-threaded solution ChatGPT had come up with was a few seconds faster than my multi-threaded solution in Elixir.
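For readers who haven't seen the challenge: the input is a text file of `station;temperature` lines, and the goal is to report the min, mean and max temperature per station. The sketch below is a minimal single-threaded take on that in Rust. It is not the program ChatGPT produced nor any of the solutions mentioned here; the names `Stats` and `aggregate` are made up for illustration, and it reads a tiny in-memory sample instead of the real file.

```rust
use std::collections::BTreeMap;
use std::io::BufRead;

/// Running aggregate for one weather station (hypothetical helper type).
#[derive(Debug, Clone, Copy, PartialEq)]
struct Stats {
    min: f64,
    max: f64,
    sum: f64,
    count: u64,
}

/// Reads `station;temperature` lines and aggregates them per station.
/// A BTreeMap keeps stations sorted by name; malformed lines are skipped.
fn aggregate<R: BufRead>(reader: R) -> BTreeMap<String, Stats> {
    let mut stations: BTreeMap<String, Stats> = BTreeMap::new();
    for line in reader.lines() {
        let line = match line {
            Ok(l) => l,
            Err(_) => break, // stop at the first I/O error
        };
        if let Some((name, value)) = line.split_once(';') {
            if let Ok(temp) = value.trim().parse::<f64>() {
                stations
                    .entry(name.to_string())
                    .and_modify(|s| {
                        s.min = s.min.min(temp);
                        s.max = s.max.max(temp);
                        s.sum += temp;
                        s.count += 1;
                    })
                    .or_insert(Stats { min: temp, max: temp, sum: temp, count: 1 });
            }
        }
    }
    stations
}

fn main() {
    // Tiny in-memory sample; the real challenge file has one billion rows.
    let sample = "Lisbon;18.5\nPorto;14.0\nLisbon;21.5\n";
    for (name, s) in aggregate(sample.as_bytes()) {
        let mean = s.sum / s.count as f64;
        println!("{}={:.1}/{:.1}/{:.1}", name, s.min, mean, s.max);
    }
}
```

Even a straightforward loop like this avoids most runtime overhead, which is part of why a naive compiled solution can compete with a tuned one on a VM. The fast entries in the official repository go much further than this.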

Up until this point I had the mentality that the fastest programming language was the one I was fastest at, which is basically the argument that developer time is more important than execution time. However, the performance delta from Elixir to Rust was just too big to ignore.

After I got my top result of around 35 seconds, I went to the official (Java) 1brc repository and saw that among the top solutions there's an implementation that takes less than three seconds to complete and doesn't use a custom VM, native binary or unsafe. On the other hand, the reference implementation time was over 4 minutes, which was on par with my first solution.

Energy Efficient Programming Languages

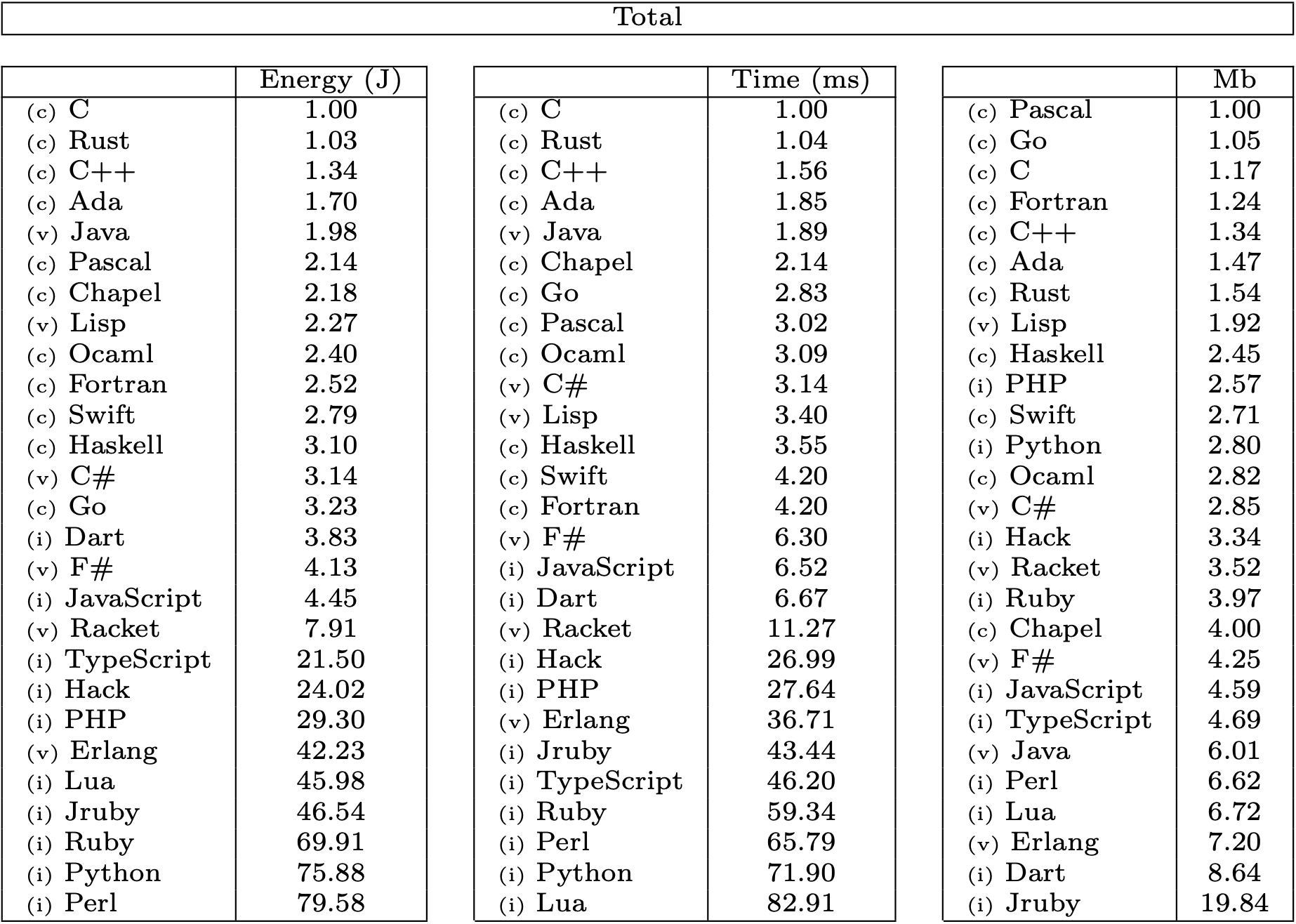

A 2021 paper out of Portugal(!) ran an interesting experiment of measuring the performance and energy consumption of the same set of programs across different programming languages. The results they found were thought provoking:

I'm not going to go on a rant about what the best programming language is, and no serious person would look at these results and suggest we write everything in C. These results also don't tell us which language is faster; different implementations might explain why C++ is seemingly slower and less efficient than C and Rust, but it's harder to explain the chasm that separates the top 5 languages from the rest of the list. Here's what stood out to me:

Compiled Languages

I'd wager most people would be able to guess correctly that compiled languages are faster in general than languages running in a VM or interpreted languages, but personally I thought the difference between them would be significantly smaller. I suspect the difference is significantly smaller for IO-bound applications, where many of the VM and interpreted languages are used successfully, but this just underlines that for compute tasks, if performance matters, a compiled language seems to be a good choice.

Java

Java seems to be the only language that punches above its weight, with performance that is comparable to compiled languages - it's a great example of how far other languages can go with the right budget and engineering effort applied to optimizing the runtime.

Java's results are good news for other JVM languages: people working with Kotlin, Scala or Clojure should be very pleased with these results.

Rust

Most of the languages in this ranking are ancient, and despite being one of the youngest, Rust seems to be on par with C and C++ in terms of performance.

When new languages come out they have "features" that sometimes are more end goals than current features. Rust mentions zero-cost abstractions and efficiency, and these results seem to validate those claims to an extent.

Hardware

Regardless of what you think about the microbenchmarks on which the paper bases its results, it's a fact that there are performance differences between languages. Take the BEAM as an example: since R16 at least there has been a move inside many modules in the standard library from native Erlang implementations to C NIFs.

Companies deploying at scale already care about this.

Taking the paper's numbers at face value, C applications running on a thousand servers would need up to 75,000 servers to run in Python, and vice versa.

Of course, in practice things are never this drastic - it's typical for interpreted languages to shell out to binaries (e.g. ffmpeg), even for Elixir; this reduces some of the waste, but it's not clear how much of it remains.
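To get a feel for how much shelling out could matter, here is a rough back-of-the-envelope sketch. It assumes, purely for illustration, that server count scales linearly with the slowdown and that a known share of the work runs at native speed. The function `servers_needed` and the `native_fraction` parameter are hypothetical, and the 75x ratio is just the one implied by the 1,000 vs 75,000 servers figure.

```rust
/// Estimates servers needed after moving a workload to a slower runtime.
/// `slowdown`: how many times more resources the slower language needs.
/// `native_fraction`: assumed share of the work delegated to native
/// binaries, between 0.0 and 1.0 (a hypothetical knob, not from the paper).
fn servers_needed(baseline: u64, slowdown: f64, native_fraction: f64) -> u64 {
    // Native work costs 1x; everything else costs `slowdown` times as much.
    let effective = native_fraction + (1.0 - native_fraction) * slowdown;
    (baseline as f64 * effective).round() as u64
}

fn main() {
    // 75x is the ratio implied by the 1,000 vs 75,000 servers example.
    for f in [0.0, 0.5, 0.9] {
        println!(
            "native share {:.0}% -> {} servers",
            f * 100.0,
            servers_needed(1_000, 75.0, f)
        );
    }
}
```

Under these assumptions, even with 90% of the work offloaded the estimate is still 8,400 servers, because the remaining 10% dominates the cost. Real systems won't follow such a tidy model, so treat this as intuition rather than a measurement.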

What do a layoff, the 1BRC and an academic paper have in common?

Although it seems like the start of a bad joke, all three things taught me the same lesson. Experiencing each of them made it obvious to me how complacent I had become. After finding a niche I enjoyed, I convinced myself I didn't need to practice anything else as long as I kept getting better at it.

Going through the layoff, I had a hard time proving my competence as a Backend Engineer in anything that wasn't Elixir or Erlang. I had some familiarity with other languages, but I lacked side projects in them and didn't know their standard libraries and frameworks well, so pretty much none of the interviews I had for other languages translated into job offers. It's hard for an employer to justify taking a risk on someone with no proven experience when there are 5 or 6 other candidates that have that experience.

The 1BRC taught me that there is value in learning how to profile and benchmark code properly. Taking a naive solution from 5 minutes down to 35 seconds involves multiple iterations: using tools to find the hotspots in the code, then either making them faster or rewriting them in a quicker way. Going through this process is valuable for the many insights you get about how to use a programming language in a performant context. When all that you care about is learning how to ship every feature imaginable, it's easy to get into a perpetual mindset of "let's ship now and optimize later". Doing the challenge taught me that it's important to take time to figure out how to do things in a performant way for when you need to pull that lever.
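As a rough illustration of that measure-then-rewrite loop, here is a minimal timing harness in Rust. It is a sketch, not a replacement for a real profiler or benchmarking library. The helpers `best_of`, `parse_naive` and `parse_tenths` are hypothetical names, and the fixed-point parser assumes exactly one digit after the decimal point, as in the 1BRC input.

```rust
use std::time::{Duration, Instant};

/// Runs `f` `runs` times and returns the fastest wall-clock time observed.
/// Taking the minimum reduces noise from other processes.
fn best_of<T>(runs: u32, mut f: impl FnMut() -> T) -> Duration {
    let mut best = Duration::MAX;
    for _ in 0..runs {
        let start = Instant::now();
        // black_box keeps the optimizer from discarding the work.
        let _result = std::hint::black_box(f());
        best = best.min(start.elapsed());
    }
    best
}

/// Baseline: parse as a float, then convert to tenths of a degree.
fn parse_naive(s: &str) -> i32 {
    (s.parse::<f64>().unwrap() * 10.0).round() as i32
}

/// Candidate rewrite: parse "-12.3" straight into tenths (-123), no floats.
/// Assumes valid input with one digit after the decimal point.
fn parse_tenths(s: &str) -> i32 {
    let (negative, digits) = match s.strip_prefix('-') {
        Some(rest) => (true, rest),
        None => (false, s),
    };
    let mut value = 0i32;
    for b in digits.bytes() {
        if b.is_ascii_digit() {
            value = value * 10 + (b - b'0') as i32;
        }
    }
    if negative { -value } else { value }
}

fn main() {
    // Synthetic inputs such as "-50.0" or "12.3".
    let inputs: Vec<String> = (0..100_000)
        .map(|i| format!("{}.{}", i % 100 - 50, i % 10))
        .collect();

    let naive = best_of(5, || inputs.iter().map(|s| parse_naive(s)).sum::<i32>());
    let tenths = best_of(5, || inputs.iter().map(|s| parse_tenths(s)).sum::<i32>());
    println!("naive: {:?}, tenths: {:?}", naive, tenths);
}
```

Build with optimizations (for example `cargo run --release`) before comparing the two numbers; which variant wins, and by how much, depends on your machine and input.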

Finally, the academic paper showed me that there are plenty of languages out there that are worth learning and trying out:

- There's a reason Java is an industry standard: it outperforms everything in its class, so it makes sense that it's still very much in use today. "Write once, run anywhere" isn't as relevant today, but it seems to have come a long way since the Java 8 I used back in college, now that it has pattern matching, immutable objects, green threads, and much more.

- There are new standards for the ancient languages, C23 and C++23: each of them slowly fixing some of the issues with previous standards of those languages. It's hard learning these languages by example (e.g. reading source code) because much of what's out there isn't written using the new standards, but there are a couple of books that promise a gentle introduction to them, both for C and C++.

- Rust seems to be a performant language with important security guarantees that might be worth the time investment. Zig was a notable omission from the paper, probably because it is still under active development. Both of these languages have an additional benefit, which is that you can write BEAM NIFs with them using rustler and zigler!

TL;DR

- If you were laid off, your new full-time job is to apply to jobs. Use all the referrals you can and don't give up. [1]

- Practice programming as often as possible; try out code katas.

- Practice programming in languages you don't use at work. Practice with languages that have big industry adoption if you work in a niche.

- Learn how to profile and optimize code for when you need it.

- Build small, practical projects in other languages and keep them public so that you can point back to them if you want a job working with a new language.

Footnotes

1. It took me almost 3 months and more than 45 applications to land a new job. It was brutal.